Getting Started with Loki

Installation

Download the Loki and Promtail binaries

# Promtail is the collection agent; it plays the same role as Filebeat and similar log shippers

wget https://github.com/grafana/loki/releases/download/v3.2.1/promtail-linux-amd64.zip

# Loki is the server side, comparable to Elasticsearch. It has no UI of its own and is paired with Grafana, much as Elasticsearch is paired with Kibana

wget https://github.com/grafana/loki/releases/download/v3.2.1/loki-linux-amd64.zip

Write the configuration file for each component

# cat promtail-local-config.yaml

# 配置 positions 存放了 job 里 文件路径和采集数量

# client 配置了 loki 地址

server:

http_listen_port: 9080

grpc_listen_port: 0

positions:

filename: /tmp/positions.yaml

clients:

- url: http://localhost:3100/loki/api/v1/push

scrape_configs:

- job_name: system

static_configs:

- targets:

- localhost

labels:

job: varlogs

__path__: /var/log/*log

stream: stdout

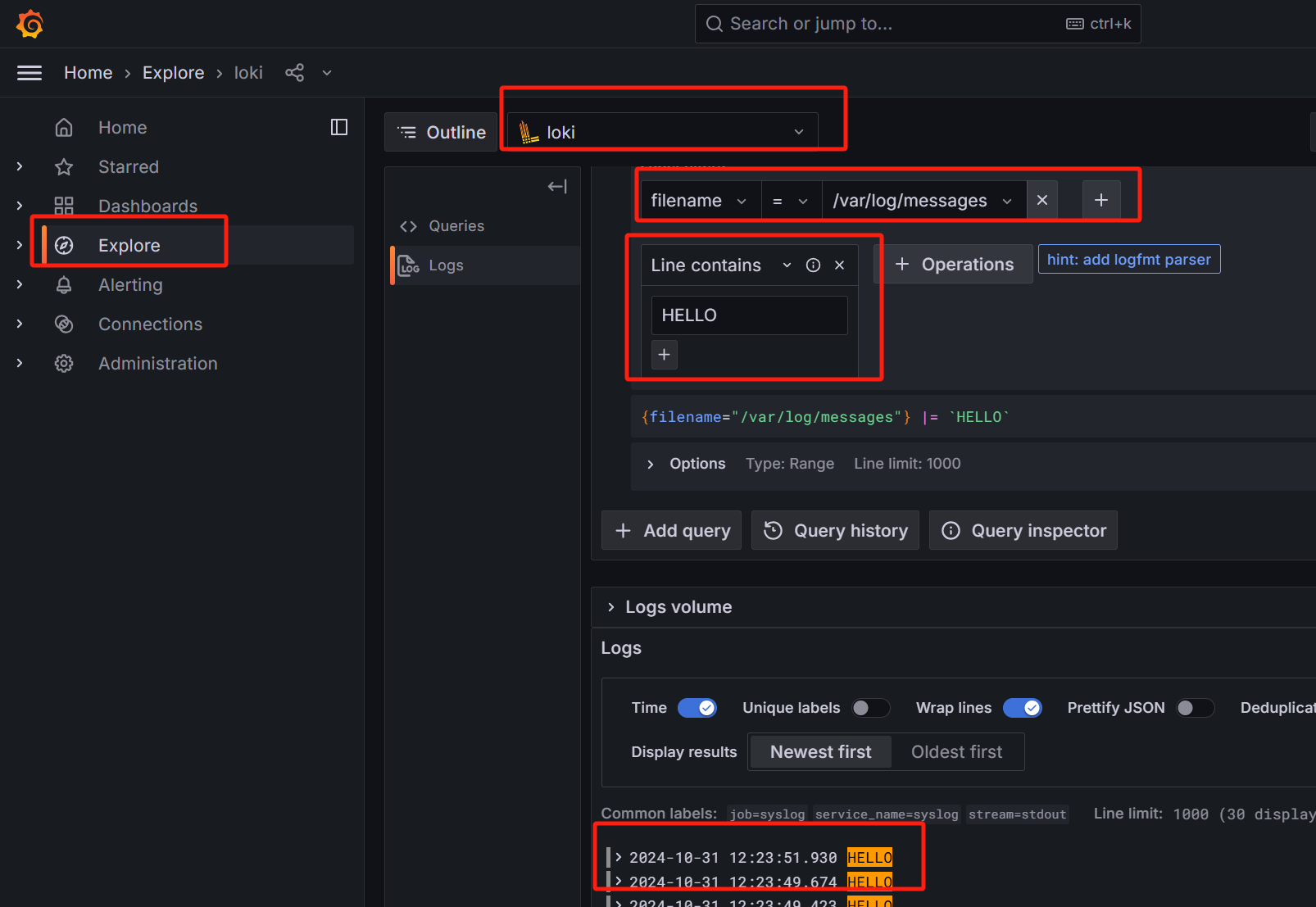

- job_name: syslog

static_configs:

- targets:

- localhost

labels:

job: syslog

__path__: /var/log/messages

stream: stdout
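The `clients.url` entry above points Promtail at Loki's push endpoint. As a rough illustration of what that endpoint accepts, the sketch below builds a JSON push body by hand. It is not how Promtail itself is implemented; the helper names `build_push_payload` and `push` are hypothetical, and the label values are simply borrowed from the `system` job. Because the Loki config in these notes sets `auth_enabled: false`, no tenant header is included.

```python
import json
import time
import urllib.request

# Assumed to match the `clients.url` value in the Promtail config.
LOKI_PUSH_URL = "http://localhost:3100/loki/api/v1/push"


def build_push_payload(labels, lines, ts_ns=None):
    """Build a JSON body for Loki's push endpoint (hypothetical helper).

    A stream is identified by its label set. Each entry is a pair of
    [unix timestamp in nanoseconds as a string, log line].
    """
    if ts_ns is None:
        ts_ns = time.time_ns()
    # Give each line a distinct, increasing timestamp.
    values = [[str(ts_ns + i), line] for i, line in enumerate(lines)]
    return {"streams": [{"stream": dict(labels), "values": values}]}


def push(payload, url=LOKI_PUSH_URL):
    """POST the payload to Loki and return the HTTP status code."""
    req = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=5) as resp:
        return resp.status


if __name__ == "__main__":
    body = build_push_payload({"job": "varlogs", "stream": "stdout"}, ["hello loki"])
    print(json.dumps(body, indent=2))
    # Uncomment once Loki is running locally:
    # print(push(body))
```

Sending one hand-built line this way can be a quick check that Loki is reachable on port 3100 before debugging Promtail itself.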

# cat loki-local-cofig.yaml
# The Loki HTTP port serves the API; it is not a UI address
# storage can be configured with different storage backends
auth_enabled: false

server:
  http_listen_port: 3100
  grpc_listen_port: 9096
  log_level: debug
  grpc_server_max_concurrent_streams: 1000

common:
  instance_addr: 127.0.0.1
  path_prefix: /tmp/loki
  storage:
    filesystem:
      chunks_directory: /tmp/loki/chunks
      rules_directory: /tmp/loki/rules
  replication_factor: 1
  ring:
    kvstore:
      store: inmemory

query_range:
  results_cache:
    cache:
      embedded_cache:
        enabled: true
        max_size_mb: 100

schema_config:
  configs:
    - from: 2020-10-24
      store: tsdb
      object_store: filesystem
      schema: v13
      index:
        prefix: index_
        period: 24h

pattern_ingester:
  enabled: true
  metric_aggregation:
    enabled: true
    loki_address: localhost:3100

ruler:
  alertmanager_url: http://localhost:9093

frontend:
  encoding: protobuf

# By default, Loki will send anonymous, but uniquely-identifiable usage and configuration
# analytics to Grafana Labs. These statistics are sent to https://stats.grafana.org/
#
# Statistics help us better understand how Loki is used, and they show us performance
# levels for most users. This helps us prioritize features and documentation.
# For more information on what's sent, look at
# https://github.com/grafana/loki/blob/main/pkg/analytics/stats.go
# Refer to the buildReport method to see what goes into a report.
#
# If you would like to disable reporting, uncomment the following lines:
#analytics:
#  reporting_enabled: false
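Since port 3100 is an API endpoint rather than a UI, one way to check the server is to call it directly. The sketch below only builds the URLs for Loki's `/ready` endpoint and a `/loki/api/v1/query_range` request; the function names are hypothetical, the base URL assumes the `http_listen_port: 3100` setting above, and the `{job="varlogs"}` selector assumes the label from the Promtail config.

```python
import time
import urllib.parse
import urllib.request

# Assumption: Loki listens locally on the http_listen_port from the config.
LOKI_BASE = "http://localhost:3100"


def ready_url(base=LOKI_BASE):
    """URL of the readiness endpoint."""
    return base.rstrip("/") + "/ready"


def query_range_url(logql, start_ns, end_ns, limit=100, base=LOKI_BASE):
    """URL for a range query; start and end are unix timestamps in nanoseconds."""
    params = urllib.parse.urlencode(
        {"query": logql, "start": start_ns, "end": end_ns, "limit": limit}
    )
    return base.rstrip("/") + "/loki/api/v1/query_range?" + params


if __name__ == "__main__":
    now = time.time_ns()
    one_hour_ns = 3600 * 10**9
    print(ready_url())
    print(query_range_url('{job="varlogs"}', now - one_hour_ns, now))
    # Uncomment once Loki is running locally:
    # with urllib.request.urlopen(ready_url(), timeout=5) as resp:
    #     print(resp.status, resp.read().decode())
```

The printed URLs can also be pasted into `curl` or a browser for a quick manual check.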

Manage both services with systemd

[root@kubernetes loki]# cat /etc/systemd/system/promtail.service
[Unit]
Description=promtail server
Wants=network-online.target
After=network-online.target

[Service]
ExecStart=/root/loki/promtail-linux-amd64 -config.file=/root/loki/promtail-local-config.yaml
StandardOutput=syslog
StandardError=syslog
SyslogIdentifier=promtail

[Install]
WantedBy=default.target

[root@kubernetes loki]# cat /etc/systemd/system/loki.service
[Unit]
Description=loki server
Wants=network-online.target
After=network-online.target

[Service]
ExecStart=/root/loki/loki-linux-amd64 -config.file=/root/loki/loki-local-cofig.yaml
StandardOutput=syslog
StandardError=syslog
SyslogIdentifier=loki

[Install]
WantedBy=default.target

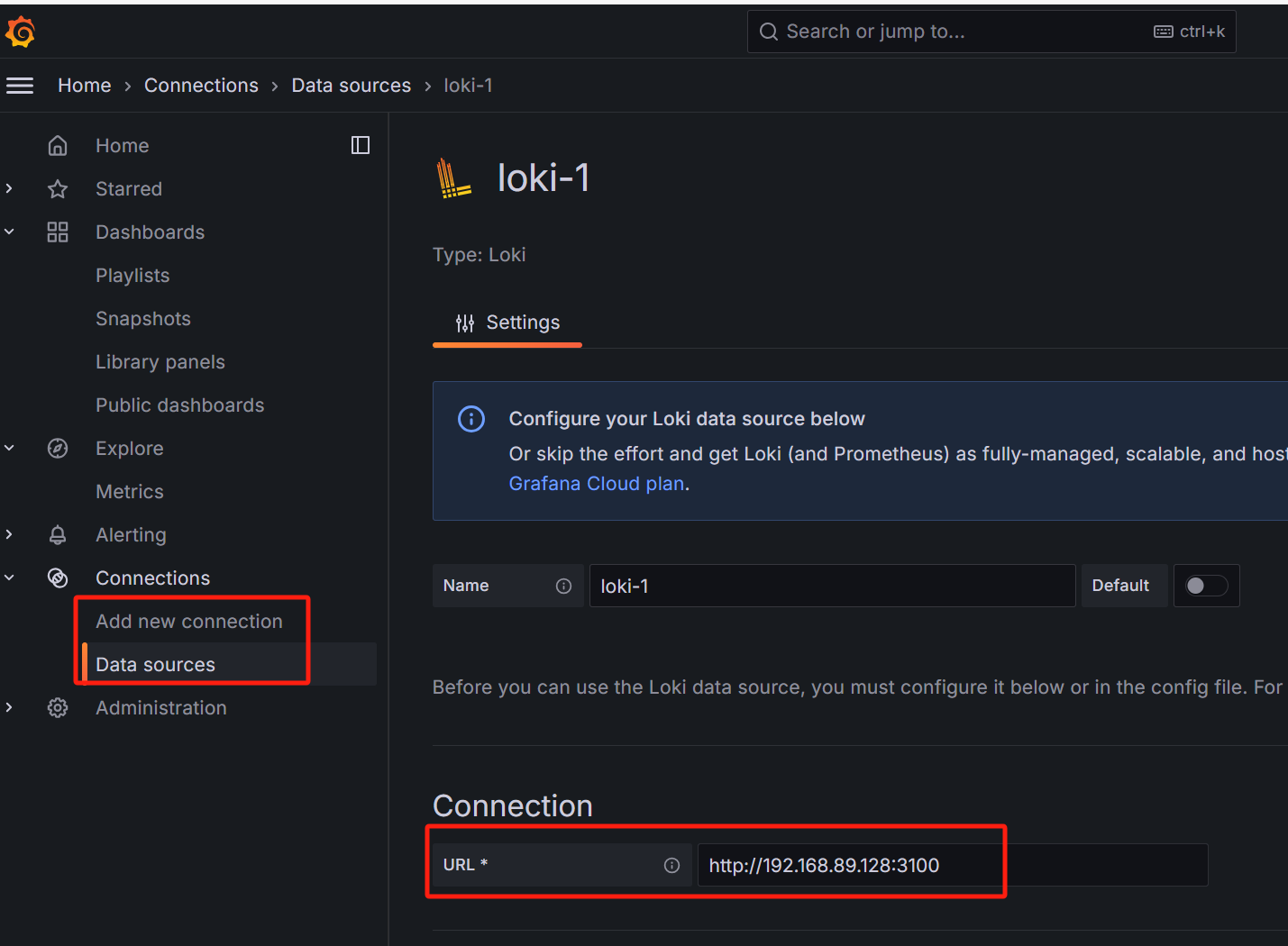
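The unit files still have to be loaded and started. A minimal sketch of that step follows, assuming the two unit names shown above; the helper names are hypothetical, and by default the script only prints the `systemctl` commands instead of running them.

```python
import subprocess

# Unit names taken from the two files under /etc/systemd/system.
UNITS = ["loki.service", "promtail.service"]


def systemctl_commands(units=UNITS):
    """Return the systemctl invocations, in order, as argument lists."""
    # Reload systemd so it picks up new or changed unit files.
    cmds = [["systemctl", "daemon-reload"]]
    for unit in units:
        # Enable the unit at boot and start it immediately.
        cmds.append(["systemctl", "enable", "--now", unit])
    return cmds


def apply(cmds, dry_run=True):
    """Print the commands, or run them (as root) when dry_run is False."""
    for cmd in cmds:
        if dry_run:
            print(" ".join(cmd))
        else:
            subprocess.run(cmd, check=True)


if __name__ == "__main__":
    apply(systemctl_commands())  # dry run: only prints the commands
```

Loki is listed first so that the push endpoint is likely to be up by the time Promtail starts sending.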

Integrating with Grafana
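Because Loki has no UI of its own, Grafana needs a Loki data source pointing at the API port. One possible way to register it is through Grafana's HTTP API (`POST /api/datasources`), sketched below. The Grafana address `http://localhost:3000`, the data source name, and the credentials are all assumptions to replace with your own; the helper names are hypothetical, and the request is only built, not sent, unless the last lines are uncommented.

```python
import base64
import json
import urllib.request

GRAFANA_URL = "http://localhost:3000"  # assumption: Grafana on its default port
LOKI_URL = "http://localhost:3100"     # Loki http_listen_port from the config


def loki_datasource(name="Loki", url=LOKI_URL):
    """Body for creating a Loki data source that Grafana proxies to."""
    return {"name": name, "type": "loki", "access": "proxy", "url": url}


def build_request(payload, user, password, grafana=GRAFANA_URL):
    """Build (but do not send) the POST /api/datasources request."""
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    return urllib.request.Request(
        grafana.rstrip("/") + "/api/datasources",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": "Basic " + token,
        },
        method="POST",
    )


if __name__ == "__main__":
    # Placeholder credentials; substitute a real Grafana admin account.
    req = build_request(loki_datasource(), "admin", "CHANGE_ME")
    print(req.get_method(), req.full_url)
    print(req.data.decode())
    # Uncomment to actually create the data source:
    # with urllib.request.urlopen(req, timeout=5) as resp:
    #     print(resp.status)
```

The same data source can be added by hand in the Grafana UI by choosing the Loki type and entering `http://localhost:3100` as the URL.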

Integrating with Kubernetes

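Loki is designed for cloud-native environments. Pod logs from Kubernetes are collected mainly by Promtail, by adding a kubernetes_sd_configs block to its configuration file.

In essence, Promtail is still reading files from the node's local filesystem under /var/log/pods/; the __path__ relabel rule in the configuration below resolves to /var/log/pods/*<pod-uid>/<container-name>/*.log.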

server:
  http_listen_port: 9080
  grpc_listen_port: 0

positions:
  filename: /tmp/positions.yaml

clients:
  - url: http://localhost:3100/loki/api/v1/push

scrape_configs:
  - job_name: system
    static_configs:
      - targets:
          - localhost
        labels:
          job: varlogs
          __path__: /var/log/*log
          stream: stdout
  - job_name: syslog
    static_configs:
      - targets:
          - localhost
        labels:
          job: syslog
          __path__: /var/log/messages
          stream: stdout
  - job_name: kubernetes-pods-name
    pipeline_stages:
      - cri: {}
    kubernetes_sd_configs:
      - role: pod
        kubeconfig_file: /root/.kube/config
    relabel_configs:
      - action: replace
        replacement: $1
        separator: /
        source_labels:
          - __meta_kubernetes_namespace
          - __service__
        target_label: job
      - replacement: /var/log/pods/*$1/*.log
        separator: /
        source_labels:
          - __meta_kubernetes_pod_uid
          - __meta_kubernetes_pod_container_name
        target_label: __path__
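To see what the two relabel rules produce, the sketch below re-implements a simplified version of the Prometheus-style `replace` action in Python: the source label values are joined with the separator, matched against a fully anchored regex (default `(.*)`), and `$1` in the replacement is expanded. The function `relabel_replace` and the pod UID and container name are hypothetical, and only `$<digit>` references are handled. Note that nothing in this config sets `__service__`, so under these assumptions it is treated as empty and the `job` label comes out as the namespace followed by a slash.

```python
import re


def relabel_replace(labels, source_labels, target_label,
                    separator=";", regex="(.*)", replacement="$1"):
    """Apply one simplified `replace` relabel rule and return the new label set."""
    # Labels that are not set contribute an empty string.
    joined = separator.join(labels.get(name, "") for name in source_labels)
    match = re.fullmatch(regex, joined)
    if match is None:
        return dict(labels)  # rule does not apply; labels stay unchanged
    # Expand $1, $2, ... with the corresponding capture groups.
    value = re.sub(r"\$(\d+)", lambda m: match.group(int(m.group(1))), replacement)
    result = dict(labels)
    result[target_label] = value
    return result


if __name__ == "__main__":
    # Hypothetical discovered pod; real values come from the Kubernetes API.
    pod = {
        "__meta_kubernetes_namespace": "default",
        "__meta_kubernetes_pod_uid": "1234-abcd",
        "__meta_kubernetes_pod_container_name": "nginx",
    }
    step1 = relabel_replace(
        pod, ["__meta_kubernetes_namespace", "__service__"], "job", separator="/"
    )
    step2 = relabel_replace(
        step1,
        ["__meta_kubernetes_pod_uid", "__meta_kubernetes_pod_container_name"],
        "__path__",
        separator="/",
        replacement="/var/log/pods/*$1/*.log",
    )
    print(step2["job"])
    print(step2["__path__"])
```

The resulting `__path__` is a glob that Promtail expands on the local filesystem, which is why the agent needs to run on the node (or have `/var/log/pods` mounted) even though discovery goes through the kubeconfig.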