Using Custom Metrics with HPA

2024-10-30

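Use Prometheus-Adapter so that HPA can scale dynamically on Custom Metrics.

While working with kube-prometheus earlier, I noticed that it ships a large number of YAML files, including the ones for the Adapter service.

The Adapter serves the custom metrics API, which lets HPA query metrics stored in Prometheus through the Adapter and scale on them.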

[root@kubernetes ~]# kubectl get pod -n monitoring

NAME READY STATUS RESTARTS AGE

alertmanager-main-0 2/2 Running 0 41h

alertmanager-main-1 2/2 Running 0 41h

alertmanager-main-2 2/2 Running 0 41h

grafana-564bd845f6-z4qsd 1/1 Running 0 41h

kube-state-metrics-66db74d6dc-nld6q 3/3 Running 0 40h

kube-state-metrics-66db74d6dc-w74mz 3/3 Running 0 40h

node-exporter-jxptq 2/2 Running 0 41h

prometheus-adapter-6c6c4dd85-6br96 1/1 Running 0 13h

prometheus-adapter-6c6c4dd85-q4pw9 1/1 Running 0 13h

prometheus-k8s-0 2/2 Running 0 39h

prometheus-k8s-1 2/2 Running 0 39h

prometheus-operator-d9b65cf6f-drlbq 2/2 Running 0 41h

Configuring the Custom Metrics API

An APIService must be created so that the API Server proxies requests to the Adapter service.

apiVersion: apiregistration.k8s.io/v1
kind: APIService
metadata:
  labels:
    app.kubernetes.io/component: metrics-adapter
    app.kubernetes.io/name: prometheus-adapter
    app.kubernetes.io/part-of: kube-prometheus
    app.kubernetes.io/version: 0.12.0
  name: v1beta1.custom.metrics.k8s.io
spec:
  group: custom.metrics.k8s.io
  groupPriorityMinimum: 100
  insecureSkipTLSVerify: true
  service:
    name: prometheus-adapter
    namespace: monitoring
    port: 443
  version: v1beta1
  versionPriority: 100

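Once it is created, v1beta1.custom.metrics.k8s.io appears in the APIServices list. It is not usable yet: the Adapter's ConfigMap must first be updated with rules that expose Prometheus metrics.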

[root@kubernetes ~]# kubectl get APIServices

NAME SERVICE AVAILABLE AGE

...

v1alpha1.monitoring.coreos.com Local True 42h

v1beta1.custom.metrics.k8s.io monitoring/prometheus-adapter False (FailedDiscoveryCheck) 14h

v1beta1.metrics.k8s.io monitoring/prometheus-adapter True 43h

...

v1beta3.flowcontrol.apiserver.k8s.io Local True 43h

v2.autoscaling Local True 43h
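The AVAILABLE column above reflects the `Available` condition in each APIService's status. As a minimal sketch, assuming the JSON comes from something like `kubectl get apiservices -o json` (the sample payload below is hand-written for illustration, not captured from this cluster), the unavailable entries and their reasons could be listed like this:

```python
import json

# Hand-written sample shaped like `kubectl get apiservices -o json` output (illustrative only).
SAMPLE = json.dumps({
    "items": [
        {
            "metadata": {"name": "v1beta1.custom.metrics.k8s.io"},
            "status": {"conditions": [
                {"type": "Available", "status": "False", "reason": "FailedDiscoveryCheck"}
            ]},
        },
        {
            "metadata": {"name": "v1beta1.metrics.k8s.io"},
            "status": {"conditions": [
                {"type": "Available", "status": "True", "reason": "Passed"}
            ]},
        },
    ]
})


def unavailable_apiservices(raw_json):
    """Return {name: reason} for APIServices whose Available condition is not True."""
    result = {}
    for item in json.loads(raw_json).get("items", []):
        conditions = item.get("status", {}).get("conditions", [])
        available = next((c for c in conditions if c.get("type") == "Available"), None)
        if available is None or available.get("status") != "True":
            reason = available.get("reason", "Unknown") if available else "NoCondition"
            result[item["metadata"]["name"]] = reason
    return result


print(unavailable_apiservices(SAMPLE))
```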

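Configuring the ConfigMap to Record Metrics

The Adapter needs rules that describe which Prometheus metrics to expose. The rules follow a fixed format: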

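- seriesQuery: defines which series to query from Prometheus; by default, put the metric name in __name__.
- resources: defines pod and namespace, which are mapped to labels in PromQL.
  - overrides: maps the namespace and pod labels, declaring that they correspond to the Kubernetes namespace and pod resources.
- name: effectively a rename; it sets the metric name that the HPA will reference, here network_receive.
- metricsQuery: defines the actual PromQL expression.
  - <<.Series>>: the series selected by seriesQuery.
  - <<.LabelMatchers>>: the label matchers derived from the labels defined in resources.
  - <<.GroupBy>>: the labels actually used for grouping.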
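To make the three placeholders concrete, here is a rough sketch of the substitution step. It is only an approximation of what the Adapter does: the function name `render_metrics_query` is hypothetical, the template is an illustrative variant that uses all three placeholders, and the real Adapter builds its own matchers (for example regex matchers when several Pods are queried at once).

```python
def render_metrics_query(template, series, label_matchers, group_by):
    """Substitute the three metricsQuery placeholders (simplified illustration)."""
    matchers = ",".join(f'{key}="{value}"' for key, value in label_matchers.items())
    return (
        template.replace("<<.Series>>", series)
        .replace("<<.LabelMatchers>>", matchers)
        .replace("<<.GroupBy>>", ",".join(group_by))
    )


# Illustrative template; the pod name is taken from the examples in this post.
TEMPLATE = "sum(irate(<<.Series>>{<<.LabelMatchers>>}[5m])) by (<<.GroupBy>>)"

query = render_metrics_query(
    TEMPLATE,
    series="container_network_receive_bytes_total",
    label_matchers={"namespace": "default", "pod": "hpa-demo-755f7fbb8f-966n9"},
    group_by=["pod"],
)
print(query)
```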

apiVersion: v1

data:

config.yaml: |-

// rules 到 metricsQuery 都为新增内容,其余内容都是 Prometheus 自带的

// 有通用格式

"rules":

- seriesQuery: '{__name__="container_network_receive_bytes_total"}'

seriesFilters: []

resources:

overrides:

namespace:

resource: namespace

pod:

resource: pods

name:

matches: "^(.*)_bytes_total"

as: "network_receive"

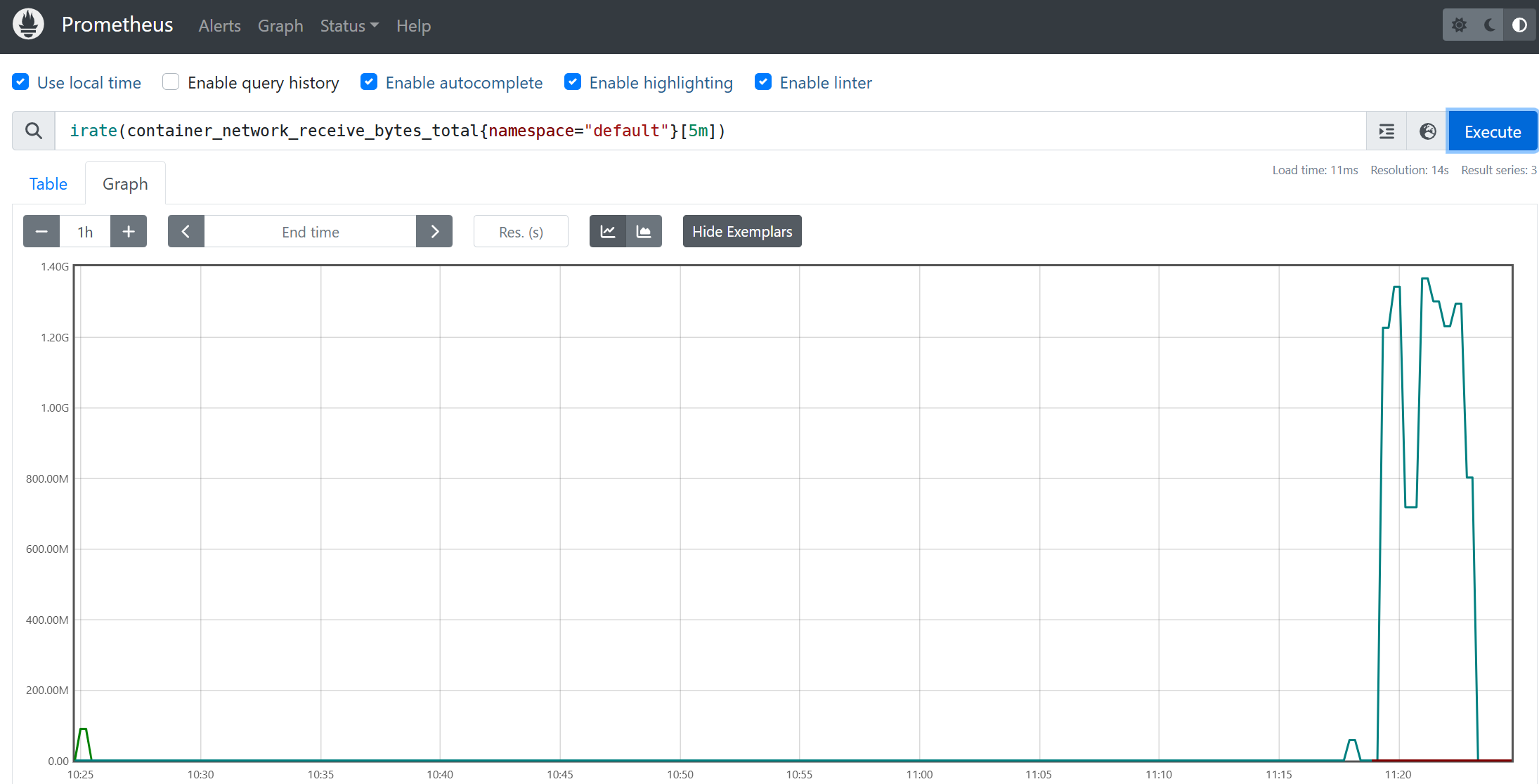

metricsQuery: irate(<<.Series>>{<<.LabelMatchers>>}[5m])

"resourceRules":

"cpu":

"containerLabel": "container"

"containerQuery": |

sum by (<<.GroupBy>>) (

irate (

container_cpu_usage_seconds_total{<<.LabelMatchers>>,container!="",pod!=""}[120s]

)

)

...

"memory":

"containerLabel": "container"

"containerQuery": |

sum by (<<.GroupBy>>) (

container_memory_working_set_bytes{<<.LabelMatchers>>,container!="",pod!=""}

)

...

"window": "5m"

kind: ConfigMap

metadata:

labels:

...

name: adapter-config

namespace: monitoring
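The name section of the rule is the least obvious part, so here is a small sketch of the renaming idea. It assumes the Adapter applies `matches` as a regular expression and replaces the match with `as`, where Go-style `$1` refers to the first capture group. The helper `exposed_metric_name` is hypothetical, and Python's `re` stands in for Go's regexp package.

```python
import re

# Go writes capture-group references as $1; Python's re expects \g<1>.
_GO_GROUP_REF = re.compile(r"\$(\d+)")


def exposed_metric_name(series_name, matches, as_name):
    """Return the metric name exposed for a series, or None if it does not match."""
    pattern = re.compile(matches)
    if not pattern.search(series_name):
        return None
    replacement = _GO_GROUP_REF.sub(lambda m: r"\g<" + m.group(1) + ">", as_name)
    return pattern.sub(replacement, series_name)


# The rule from the ConfigMap: a fixed name, regardless of the captured prefix.
print(exposed_metric_name(
    "container_network_receive_bytes_total", "^(.*)_bytes_total", "network_receive"))

# An illustrative alternative that derives the name from the capture group.
print(exposed_metric_name(
    "container_network_receive_bytes_total", "^container_(.*)_bytes_total$", "$1"))
```

Both calls yield network_receive; the second form would generalize to other `container_*_bytes_total` series if seriesQuery selected more than one.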

After the Adapter is restarted, the APIService is reported as available:

[root@kubernetes adapter]# kubectl get APIServices

NAME SERVICE AVAILABLE AGE

v1. Local True 43h

v1.admissionregistration.k8s.io Local True 43h

v1.apiextensions.k8s.io Local True 43h

v1.apps Local True 43h

v1.authentication.k8s.io Local True 43h

v1.authorization.k8s.io Local True 43h

v1.autoscaling Local True 43h

v1.batch Local True 43h

v1.certificates.k8s.io Local True 43h

v1.coordination.k8s.io Local True 43h

v1.discovery.k8s.io Local True 43h

v1.events.k8s.io Local True 43h

v1.monitoring.coreos.com Local True 42h

v1.networking.k8s.io Local True 43h

v1.node.k8s.io Local True 43h

v1.policy Local True 43h

v1.rbac.authorization.k8s.io Local True 43h

v1.scheduling.k8s.io Local True 43h

v1.storage.k8s.io Local True 43h

v1alpha1.monitoring.coreos.com Local True 42h

v1beta1.custom.metrics.k8s.io monitoring/prometheus-adapter True 14h

v1beta1.metrics.k8s.io monitoring/prometheus-adapter True 43h

v1beta1.metrics.pixiu.io pixiu-system/pixiu-metrics-scraper True 43h

v1beta2.flowcontrol.apiserver.k8s.io Local True 43h

v1beta3.flowcontrol.apiserver.k8s.io Local True 43h

v2.autoscaling Local True 43h

Verification

Once the APIService is available, the Prometheus metrics can be inspected through the API:

[root@kubernetes ~]# kubectl get --raw "/apis/custom.metrics.k8s.io/v1beta1" | jq

{
  "kind": "APIResourceList",
  "apiVersion": "v1",
  "groupVersion": "custom.metrics.k8s.io/v1beta1",
  "resources": [
    {
      "name": "namespaces/network_receive",
      "singularName": "",
      "namespaced": false,
      "kind": "MetricValueList",
      "verbs": [
        "get"
      ]
    },
    {
      "name": "pods/network_receive",
      "singularName": "",
      "namespaced": true,
      "kind": "MetricValueList",
      "verbs": [
        "get"
      ]
    }
  ]
}

Querying the metric for the Pods in the default namespace shows that the Pod now has the network_receive metric, so HPA testing can begin:

[root@kubernetes ~]# kubectl get --raw "/apis/custom.metrics.k8s.io/v1beta1/namespaces/default/pods/*/network_receive" | jq

{
  "kind": "MetricValueList",
  "apiVersion": "custom.metrics.k8s.io/v1beta1",
  "metadata": {},
  "items": [
    {
      "describedObject": {
        "kind": "Pod",
        "namespace": "default",
        "name": "hpa-demo-755f7fbb8f-966n9",
        "apiVersion": "/v1"
      },
      "metricName": "network_receive",
      "timestamp": "2024-10-30T03:05:26Z",
      "value": "0",
      "selector": null
    }
  ]
}
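When checking several Pods, it can be handy to reduce this response to a name-to-value mapping. A minimal sketch, assuming the JSON above has been saved to a string (here an abbreviated copy of the response shown above; the helper name `pod_metric_values` is made up for illustration):

```python
import json

# Abbreviated copy of the MetricValueList response shown above.
RESPONSE = """
{
  "kind": "MetricValueList",
  "apiVersion": "custom.metrics.k8s.io/v1beta1",
  "metadata": {},
  "items": [
    {
      "describedObject": {
        "kind": "Pod",
        "namespace": "default",
        "name": "hpa-demo-755f7fbb8f-966n9",
        "apiVersion": "/v1"
      },
      "metricName": "network_receive",
      "timestamp": "2024-10-30T03:05:26Z",
      "value": "0",
      "selector": null
    }
  ]
}
"""


def pod_metric_values(raw_json):
    """Map each described Pod name to its raw metric value (a quantity string)."""
    document = json.loads(raw_json)
    return {
        item["describedObject"]["name"]: item["value"]
        for item in document.get("items", [])
    }


print(pod_metric_values(RESPONSE))
```

The values stay as strings because the API returns Kubernetes quantities, which may carry suffixes such as m or k.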

Testing

Create the test resources and apply them all at once:

---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: hpa-demo
spec:
  selector:
    matchLabels:
      app: hpa
  template:
    metadata:
      labels:
        app: hpa
    spec:
      containers:
      - name: iperf
        image: moutten/iperf
---
apiVersion: v1
kind: Service
metadata:
  name: hpa-demo
spec:
  ports:
  - port: 5201
    protocol: TCP
    targetPort: 5201
  selector:
    app: hpa
  type: NodePort
---
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: hpa-demo
spec:
  maxReplicas: 10
  metrics:
  - type: Pods
    pods:
      metric:
        name: network_receive
      target:
        # Pods metrics are compared as a per-Pod average, so the target type is AverageValue
        type: AverageValue
        averageValue: 10000
  minReplicas: 1
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: hpa-demo
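For intuition about how the HPA turns this target into a replica count, here is a simplified sketch of the scaling formula described in the Kubernetes documentation: desired = ceil(currentReplicas * currentValue / targetValue), skipped when the ratio is within a tolerance (0.1 by default) and clamped to minReplicas and maxReplicas. The function below is an illustration only; the real controller also applies stabilization windows, scaling policies, and special handling for not-ready Pods.

```python
import math


def desired_replicas(current_replicas, current_value, target_value,
                     min_replicas, max_replicas, tolerance=0.1):
    """Simplified HPA replica calculation for an averaged per-Pod metric."""
    ratio = current_value / target_value
    if abs(ratio - 1.0) <= tolerance:
        # Close enough to the target: keep the current replica count.
        desired = current_replicas
    else:
        desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


# Idle Pod: the metric is 0, so the count stays at minReplicas.
print(desired_replicas(1, 0, 10000, min_replicas=1, max_replicas=10))

# Average of 15000 bytes/s against a 10000 target with 2 replicas.
print(desired_replicas(2, 15000, 10000, min_replicas=1, max_replicas=10))
```

With a target of only 10000 bytes per second, any sustained iperf3 traffic pushes the result straight to maxReplicas.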

Check the status

[root@kubernetes ~]# kubectl get pod

NAME READY STATUS RESTARTS AGE

hpa-demo-755f7fbb8f-966n9 1/1 Running 0 65m

[root@kubernetes ~]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

hpa-demo NodePort 10.254.237.91 <none> 5201:32479/TCP 70s

kubectl ClusterIP 10.254.174.106 <none> 80/TCP 20h

kubernetes ClusterIP 10.254.0.1 <none> 443/TCP 44h

[root@kubernetes ~]# kubectl get hpa

NAME REFERENCE TARGETS MINPODS MAXPODS REPLICAS AGE

hpa-demo Deployment/hpa-demo 0/10k 1 10 1 14h

Load testing with iperf3

# iperf3 is easy to install on the local host; the Service is reached through its NodePort

Connecting to host 192.168.89.128, port 32479

[ 5] local 192.168.89.128 port 36808 connected to 192.168.89.128 port 32479

[ ID] Interval Transfer Bitrate Retr Cwnd

[ 5] 0.00-1.00 sec 1.15 GBytes 9.87 Gbits/sec 1 2.81 MBytes

[ 5] 1.00-2.00 sec 1.18 GBytes 10.1 Gbits/sec 0 2.81 MBytes

[ 5] 2.00-3.01 sec 1.17 GBytes 9.97 Gbits/sec 0 2.81 MBytes

[ 5] 3.01-4.00 sec 1.16 GBytes 10.0 Gbits/sec 0 2.81 MBytes

[ 5] 4.00-5.01 sec 1.16 GBytes 9.87 Gbits/sec 0 2.81 MBytes

[ 5] 5.01-6.00 sec 1.15 GBytes 10.0 Gbits/sec 0 2.81 MBytes

[ 5] 6.00-7.01 sec 1.18 GBytes 10.0 Gbits/sec 0 2.81 MBytes

[ 5] 7.01-8.00 sec 1.17 GBytes 10.1 Gbits/sec 0 2.81 MBytes

[ 5] 8.00-9.01 sec 1.16 GBytes 9.92 Gbits/sec 0 2.81 MBytes

[ 5] 9.01-10.00 sec 1.16 GBytes 10.0 Gbits/sec 0 2.81 MBytes

[ 5] 10.00-11.00 sec 1.16 GBytes 9.96 Gbits/sec 0 2.81 MBytes
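The network_receive metric is computed with irate over container_network_receive_bytes_total, so its unit is bytes per second. As a simplified sketch of what irate does, it takes the last two samples of a counter in the range and divides the increase by the elapsed time, treating a drop in value as a counter reset. The sample values below are made up for illustration, and Prometheus itself handles more edge cases than this.

```python
def irate(samples):
    """Per-second rate from the last two (timestamp_seconds, counter_value) samples.

    `samples` must be ordered oldest first. Returns None when fewer than two
    samples are available or the timestamps do not advance.
    """
    if len(samples) < 2:
        return None
    (t_prev, v_prev), (t_last, v_last) = samples[-2], samples[-1]
    interval = t_last - t_prev
    if interval <= 0:
        return None
    # A counter that went down was reset; count only what accumulated since the reset.
    increase = v_last if v_last < v_prev else v_last - v_prev
    return increase / interval


# Hypothetical scrapes 15 seconds apart: 150000 bytes received in the last interval.
print(irate([(0, 0), (15, 1000), (30, 151000)]))
```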

The HPA can now scale dynamically on the custom metric served through the Custom Metrics API:

[root@kubernetes ~]# kubectl get hpa

NAME REFERENCE TARGETS MINPODS MAXPODS REPLICAS AGE

hpa-demo Deployment/hpa-demo 358648919794m/10k 1 10 10 14h

[root@kubernetes ~]# kubectl get pod

NAME READY STATUS RESTARTS AGE

hpa-demo-755f7fbb8f-8vhx2 0/1 Pending 0 2m50s

hpa-demo-755f7fbb8f-966n9 1/1 Running 0 70m

hpa-demo-755f7fbb8f-bt899 0/1 Pending 0 2m35s

hpa-demo-755f7fbb8f-bw7q5 0/1 Pending 0 2m50s

hpa-demo-755f7fbb8f-dtqww 1/1 Running 0 3m5s

hpa-demo-755f7fbb8f-gtgdg 0/1 Pending 0 2m50s

hpa-demo-755f7fbb8f-k9pqn 0/1 Pending 0 3m5s

hpa-demo-755f7fbb8f-lzdsl 0/1 Pending 0 3m5s

hpa-demo-755f7fbb8f-t4nwv 0/1 Pending 0 2m35s

hpa-demo-755f7fbb8f-xjc5n 0/1 Pending 0 2m50s
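The TARGETS column above reads 358648919794m/10k, which uses Kubernetes quantity notation: the m suffix means thousandths and k means thousands. A minimal sketch for converting such strings to plain numbers follows. It handles only the decimal suffixes listed in the table, not binary ones such as Ki or Mi, and `parse_quantity` is a hypothetical helper rather than part of any Kubernetes client.

```python
from decimal import Decimal

# Decimal SI suffixes used by Kubernetes quantities (subset, for illustration).
_DECIMAL_SUFFIXES = {
    "m": Decimal("0.001"),
    "k": Decimal(10) ** 3,
    "M": Decimal(10) ** 6,
    "G": Decimal(10) ** 9,
    "T": Decimal(10) ** 12,
}


def parse_quantity(text):
    """Convert a quantity string such as '358648919794m' or '10k' to a Decimal."""
    text = text.strip()
    suffix = text[-1:]
    if suffix in _DECIMAL_SUFFIXES:
        return Decimal(text[:-1]) * _DECIMAL_SUFFIXES[suffix]
    return Decimal(text)


current = parse_quantity("358648919794m")  # average bytes per second per Pod
target = parse_quantity("10k")
print(current, target, current > target)
```

Read this way, the observed average is roughly 358.6 million bytes per second per Pod against a target of 10000, which is why the HPA scaled to the maximum of 10 replicas.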