DaemonSet

2023-12-05

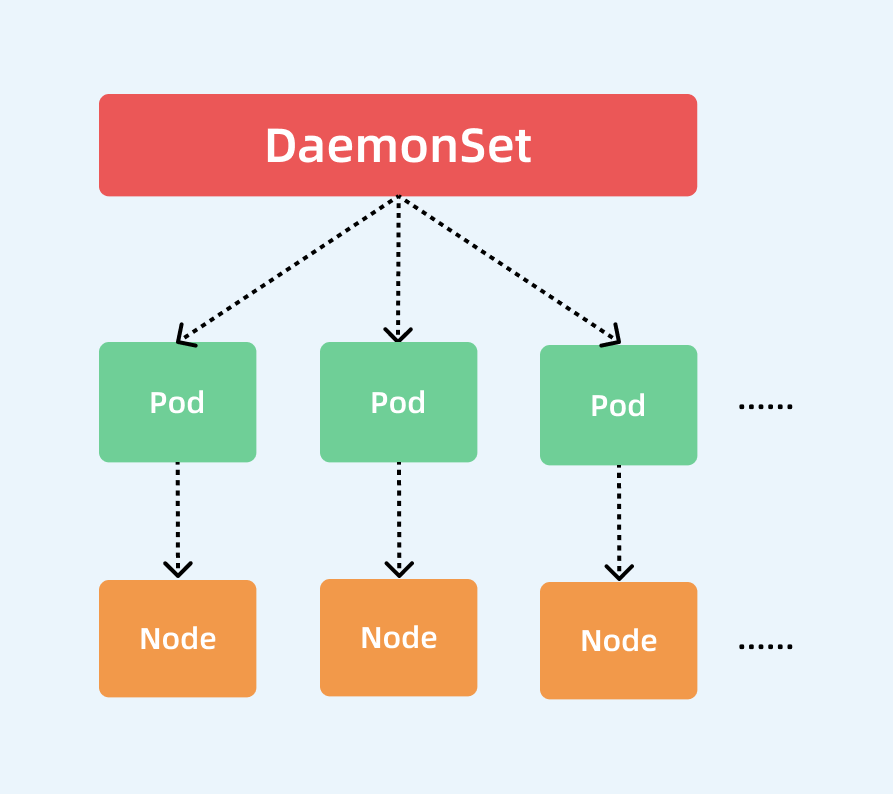

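A DaemonSet runs one copy of a Pod on every node. When a node joins the cluster, a Pod is created on it automatically; when a node is removed, its Pod is deleted. Master nodes usually do not get a Pod, because they normally carry a taint, and without an explicit toleration nothing is scheduled onto them.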

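When would we need this? The scenario is actually quite common, for example:

- Cluster storage daemons such as glusterd or ceph, which must run on every node to provide persistent storage;

- Node monitoring daemons: when Prometheus monitors the cluster, a node-exporter process can run on every node to collect node metrics;

- Log collection daemons such as fluentd or logstash, which run on every node to collect container logs;

- Node network plugins such as flannel or calico, which run on every node to provide networking for Pods.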
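As an illustration of the monitoring case, here is a minimal sketch of a node-exporter DaemonSet. The file name, the `monitoring` namespace, the `app: node-exporter` label, and the host settings are assumptions made for this example, not taken from a real deployment.

```yaml
# node-exporter-ds.yaml (hypothetical file name; a sketch, not a production manifest)
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: node-exporter
  namespace: monitoring          # assumed namespace; it must already exist
spec:
  selector:
    matchLabels:
      app: node-exporter         # assumed label; must match the template labels
  template:
    metadata:
      labels:
        app: node-exporter
    spec:
      hostNetwork: true          # serve metrics on the node's own IP
      hostPID: true              # let the exporter see host processes
      containers:
      - name: node-exporter
        image: prom/node-exporter   # tag left unpinned here; pin a version in practice
        ports:
        - name: metrics
          containerPort: 9100    # node-exporter's default listen port
```

Because it is a DaemonSet, there is no `replicas` field: the number of Pods follows the number of eligible nodes.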

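In older Kubernetes releases, the DaemonSet controller set `.spec.nodeName` on its Pods itself, so services created through a DaemonSet could start even when the scheduler was not yet running. Since Kubernetes 1.12, DaemonSet Pods are placed by the default scheduler instead, which is why the Events in the `describe` output at the end of this post list `default-scheduler` as the source of the Scheduled event.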
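On Kubernetes 1.12 and later, the controller pins each Pod to its node by adding a node affinity term to the Pod spec and leaves the binding to the default scheduler. A sketch of roughly what that injected term looks like, with `kube02` as a hypothetical node name:

```yaml
# Fragment of a DaemonSet Pod's spec as added by the controller (not written by the user)
spec:
  affinity:
    nodeAffinity:
      requiredDuringSchedulingIgnoredDuringExecution:
        nodeSelectorTerms:
        - matchFields:
          - key: metadata.name   # match on the node's name
            operator: In
            values:
            - kube02             # hypothetical target node
```

If the Pod template already defines its own node affinity, the controller takes it into account when choosing eligible nodes and then replaces it with a term of this form on the created Pod.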

An example DaemonSet manifest:

    # nginx-ds.yaml
    apiVersion: apps/v1
    kind: DaemonSet
    metadata:
      name: nginx-ds
      namespace: default
    spec:
      selector:
        matchLabels:
          k8s-app: nginx
      template:
        metadata:
          labels:
            k8s-app: nginx
        spec:
          containers:
          - image: nginx:1.7.9
            name: nginx
            ports:
            - name: http
              containerPort: 80

Create the resource from the manifest and list the Pods:

    [root@kube01 ds]# kubectl apply -f nginx-ds.yaml
    daemonset.apps/nginx-ds created
    [root@kube01 ds]# kubectl get pod -l k8s-app=nginx
    NAME             READY   STATUS    RESTARTS   AGE
    nginx-ds-4vnht   1/1     Running   0          57s

There is only one Pod, even though the cluster has two nodes. The reason is that the Master node carries a taint, so the DaemonSet needs a toleration for it:

    # nginx-ds.yaml
    apiVersion: apps/v1
    kind: DaemonSet
    metadata:
      name: nginx-ds
      namespace: default
    spec:
      selector:
        matchLabels:
          k8s-app: nginx
      template:
        metadata:
          labels:
            k8s-app: nginx
        spec:
          containers:
          - image: nginx:1.7.9
            name: nginx
            ports:
            - name: http
              containerPort: 80
          tolerations:
          - key: "node-role.kubernetes.io/master"
            operator: "Exists"
            effect: "NoSchedule"
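The taint key depends on the Kubernetes version: newer kubeadm-built clusters taint control-plane nodes with `node-role.kubernetes.io/control-plane` rather than `node-role.kubernetes.io/master`. A sketch of a `tolerations` list that covers both keys, assuming you do not know in advance which one your cluster uses (check with `kubectl describe node`):

```yaml
# Fragment of the Pod template spec; replaces the tolerations list in nginx-ds.yaml
tolerations:
- key: "node-role.kubernetes.io/master"         # key used by older clusters
  operator: "Exists"
  effect: "NoSchedule"
- key: "node-role.kubernetes.io/control-plane"  # key used by newer clusters
  operator: "Exists"
  effect: "NoSchedule"
```

A toleration for a taint that is not present on any node is harmless, so listing both keys keeps the same manifest usable across versions.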

After re-applying the manifest, the Master node has a Pod as well.

Describing a Pod shows `Controlled By: DaemonSet/nginx-ds`, which tells us that a DaemonSet manages its Pods directly, with no intermediate object in between:

    [root@kube01 ds]# kubectl describe pod nginx-ds-2nx9s
    Name:         nginx-ds-2nx9s
    Namespace:    default
    Priority:     0
    Node:         kube01/192.168.17.42
    Start Time:   Tue, 13 Jun 2023 14:48:44 +0800
    Labels:       controller-revision-hash=867c95757d
                  k8s-app=nginx
                  pod-template-generation=2
    Annotations:  <none>
    Status:       Running
    IP:           172.30.0.6
    IPs:
      IP:           172.30.0.6
    Controlled By:  DaemonSet/nginx-ds
    Containers:
      nginx:
        Container ID:   docker://60800248d0edd65034bc5ec3a26f9c87549182ab5bca7e0eddc98f2bd07ea74b
        Image:          nginx:1.7.9
        Image ID:       docker-pullable://nginx@sha256:e3456c851a152494c3e4ff5fcc26f240206abac0c9d794affb40e0714846c451
        Port:           80/TCP
        Host Port:      0/TCP
        State:          Running
          Started:      Tue, 13 Jun 2023 14:48:51 +0800
        Ready:          True
        Restart Count:  0
        Environment:    <none>
        Mounts:
          /var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-2pfll (ro)
    Conditions:
      Type              Status
      Initialized       True
      Ready             True
      ContainersReady   True
      PodScheduled      True
    Volumes:
      kube-api-access-2pfll:
        Type:                    Projected (a volume that contains injected data from multiple sources)
        TokenExpirationSeconds:  3607
        ConfigMapName:           kube-root-ca.crt
        ConfigMapOptional:       <nil>
        DownwardAPI:             true
    QoS Class:                   BestEffort
    Node-Selectors:              <none>
    Tolerations:                 node-role.kubernetes.io/master:NoSchedule op=Exists
                                 node.kubernetes.io/disk-pressure:NoSchedule op=Exists
                                 node.kubernetes.io/memory-pressure:NoSchedule op=Exists
                                 node.kubernetes.io/not-ready:NoExecute op=Exists
                                 node.kubernetes.io/pid-pressure:NoSchedule op=Exists
                                 node.kubernetes.io/unreachable:NoExecute op=Exists
                                 node.kubernetes.io/unschedulable:NoSchedule op=Exists
    Events:
      Type    Reason     Age   From               Message
      ----    ------     ----  ----               -------
      Normal  Scheduled  80s   default-scheduler  Successfully assigned default/nginx-ds-2nx9s to kube01
      Normal  Pulling    80s   kubelet            Pulling image "nginx:1.7.9"
      Normal  Pulled     73s   kubelet            Successfully pulled image "nginx:1.7.9" in 6.478137132s
      Normal  Created    73s   kubelet            Created container nginx
      Normal  Started    73s   kubelet            Started container nginx
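The `Controlled By` line is derived from the Pod's `metadata.ownerReferences`. A sketch of roughly what that part of the Pod looks like when viewed with `kubectl get pod nginx-ds-2nx9s -o yaml`; the `uid` field is deliberately left out because its value is not shown anywhere in the output above:

```yaml
# Fragment of the Pod object; uid omitted because it was not captured above
metadata:
  name: nginx-ds-2nx9s
  namespace: default
  ownerReferences:
  - apiVersion: apps/v1
    kind: DaemonSet
    name: nginx-ds
    controller: true            # marks this owner as the managing controller
    blockOwnerDeletion: true
```

The owner here is the DaemonSet itself, whereas a Pod created through a Deployment lists a ReplicaSet as its owner.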